Why Multi-Agent AI Systems Fail Like Bad Human Teams

Multi-agent AI systems (MAS) are increasingly heralded as the new frontier of artificial intelligence: teams of large language model (LLM) agents collaborating to solve complex problems more effectively than any single model could. Microsoft CEO Satya Nadella has even declared that spinning up an AI agent is “as simple as creating a Word document or a PowerPoint slide.” But why stop there? The vision extends to entire teams of agents working in concert, at our beck and call.

This vision is seductive: with just a natural language prompt, we could create agents to manage projects, connect to databases, or coordinate with other agents to complete sophisticated tasks. But this simplistic view obscures a troubling reality. These systems often don’t perform better than a single model—and in many cases, they fail catastrophically. Tasks go unfinished, errors go unchecked, and failures compound.

A recent research paper by a team at UC Berkeley offers a compelling explanation:

Multi-agent AI systems behave like badly managed human teams.

These systems suffer from the same dysfunctions that plague human organizations: confused roles, poor communication, misaligned goals, and inadequate quality checks. One of the paper’s key insights is that many failures in multi-agent LLM systems directly mirror the classic problems of human organizational design.

And just like with human teams, the consequences can be far worse than mere inefficiency—they can be catastrophic. The solution, according to the researchers, isn’t simply better models with more parameters or training data. What’s missing is organizational thinking: clear role definition, institutional memory, structured communication protocols, and robust verification mechanisms—the fundamental principles that underpin successful human teams.

The Same 14 Ways to Fail

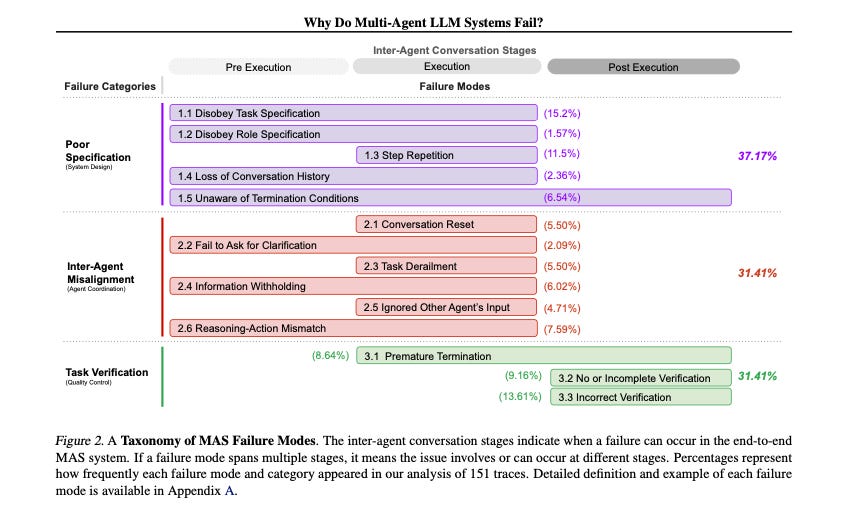

The paper, *Why Do Multi-Agent LLM Systems Fail?*, identifies 14 distinct failure modes that occur across a wide range of multi-agent setups. These include unclear goals, poor role adherence, lost context, ignored input, and inadequate verification. Some failures merely cause inefficiency. Others are more serious, producing wrong or incomplete outputs with no one in the loop to catch the errors.

What’s most revealing is that these are not exotic or uniquely “AI” failures. They are the same patterns of dysfunction that plague human teams. They arise from identical underlying breakdowns: confusion, misalignment, and lack of system-level oversight.

To make this more concrete, let’s examine a simple project: a team is asked to develop a math game for kids. We’ll look at what might go wrong when that team is made up of humans—and then again when it’s composed of AI agents playing roles like Product Manager, Developer, Curriculum Expert, QA, and UX Designer.

What happens when a group of people is tasked with building a math game, but the team is poorly managed?

| Failure mode | Human team example |
| --- | --- |
| Did the wrong task | Team builds a trivia app instead of a math-focused learning game. |
| Didn’t follow assigned roles | Lead developer rewrites math content, overriding the education specialist. |
| Repeated steps | Two designers unknowingly create duplicate versions of the same level. |
| Forgot earlier parts of the conversation | Team includes math content above the target grade level due to forgotten goals. |
| Didn’t know when the task should end | Team keeps adding features and never finalizes the release. |
| Restarted the conversation randomly | New product manager discards prior plans and starts over without reviewing past work. |
| Didn’t ask questions when confused | Developer mis-implements “adaptive difficulty” by guessing what it means. |
| Lost focus and drifted off-task | Team spends time on character design instead of core gameplay features. |
| Withheld useful information | Tester finds crashes on tablets but doesn’t report them, assuming someone else will. |
| Ignored teammates’ input | Dev team pushes live a level flagged as incorrect by the education advisor. |
| Mismatch between thinking and doing | Designer claims the UI is kid-friendly but uses small text and unclear icons. |
| Ended the task too early | Game is marked complete without any real user testing. |
| Didn’t double-check the results | Math questions are launched without reviewing them for accuracy. |
| Checked the results, but did it wrong | Testing focuses on bugs but skips evaluating whether difficulty adapts correctly. |

What happens when a team of LLM agents is given the same task, without thoughtful system design?

| Failure mode | LLM agent team example |
| --- | --- |
| Did the wrong task | ProductManagerAgent misinterprets the prompt and directs the team to build a trivia app. |
| Didn’t follow assigned roles | DeveloperAgent rewrites math content instead of deferring to CurriculumExpertAgent. |
| Repeated steps | GameDesignerAgent and UXAgent both design the same level independently, unaware of each other’s work. |
| Forgot earlier parts of the conversation | CurriculumExpertAgent forgets to align content with 3rd-grade standards and includes algebra questions. |
| Didn’t know when the task should end | ProductManagerAgent keeps generating new tasks (like adding multiplayer) with no clear stopping rule. |
| Restarted the conversation randomly | ProductManagerAgent wipes previous plans and initiates a fresh plan midstream without review. |
| Didn’t ask questions when confused | DeveloperAgent doesn’t understand what “adaptive difficulty” means but proceeds without clarification. |
| Lost focus and drifted off-task | UXAgent begins tweaking button styles instead of prioritizing usability for children. |
| Withheld useful information | QAAgent detects a crash in the tablet version but never posts the result to the shared thread. |
| Ignored teammates’ input | DeveloperAgent dismisses warnings from CurriculumExpertAgent about flawed math logic. |
| Mismatch between thinking and doing | UXAgent claims the interface is accessible but uses small fonts and unclear visual cues. |
| Ended the task too early | DeveloperAgent marks the project complete without initiating testing or review. |
| Didn’t double-check the results | QAAgent confirms the game loads but skips reviewing the accuracy of math content. |
| Checked the results, but did it incorrectly | QAAgent checks for visual bugs but fails to verify whether the difficulty adapts correctly to users. |

These examples make one thing clear: the problem isn’t intelligence—it’s coordination. Whether the team is made of humans or machines, the same breakdowns emerge when systems lack structure, clarity, and accountability. And that’s the deeper lesson of the paper: multi-agent AI systems don’t just need better models—they need better design.

To build systems that work reliably under pressure, we have to stop thinking of them as simple tools—and start treating them as complex systems that introduce real risk.

AI as a Complex System: Design for Risk, Not Just Capability

Multi-agent AI systems aren’t just software—they’re complex systems. And like all complex systems, they carry inherent risks: unpredictable interactions, cascading failures, coordination breakdowns, and fragile handoffs. These systems don’t fail because the models aren’t smart enough. They fail because the system itself hasn’t been designed with complexity in mind.

This is why the paper’s message is so important. It reminds us that the real challenge isn’t just technical—it’s organizational. We’re not just building AI agents. We’re creating agent teams, agent workflows, agent organizations. And without deliberate structures—clear roles, institutional memory, robust verification, graceful failure modes—these systems will fall prey to the same chaos that plagues badly managed human teams.
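To make those structures less abstract, here is a minimal sketch in Python of how role ownership, a shared record, a stopping rule, and independent verification might be enforced in code rather than left to each agent’s judgment. Nothing here comes from the paper: the `Task` and `Workspace` classes, the `max_steps` budget, and the rule that a verifier must differ from the author are all assumptions made for illustration, and the agent names are borrowed from the math game example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Task:
    name: str
    owner: str          # the only role allowed to complete this task
    done: bool = False


@dataclass
class Workspace:
    """Shared state every agent reads and writes (a stand-in for institutional memory)."""
    goal: str
    tasks: dict
    max_steps: int = 10                       # explicit stopping rule (assumed budget)
    steps: int = 0
    log: list = field(default_factory=list)   # every decision is recorded

    def complete(self, role: str, task_name: str,
                 verified_by: Optional[str] = None) -> bool:
        """Accept a completion only from the owning role, with an independent check."""
        self.steps += 1
        if self.steps > self.max_steps:
            self.log.append("halted: step budget exhausted")
            return False
        task = self.tasks[task_name]
        if role != task.owner:
            self.log.append(f"rejected: {role} does not own {task_name}")
            return False
        if verified_by is None or verified_by == role:
            self.log.append(f"rejected: {task_name} has no independent verification")
            return False
        task.done = True
        self.log.append(f"done: {task_name} by {role}, verified by {verified_by}")
        return True

    def finished(self) -> bool:
        """The project is finished only when every task has been accepted."""
        return all(task.done for task in self.tasks.values())


ws = Workspace(
    goal="math game for 3rd graders",
    tasks={
        "math_content": Task("math_content", owner="CurriculumExpertAgent"),
        "game_code": Task("game_code", owner="DeveloperAgent"),
    },
)
ws.complete("DeveloperAgent", "math_content", verified_by="QAAgent")   # wrong role
ws.complete("DeveloperAgent", "game_code")                              # unverified
ws.complete("DeveloperAgent", "game_code", verified_by="QAAgent")       # accepted
ws.complete("CurriculumExpertAgent", "math_content", verified_by="QAAgent")
print(ws.finished())
print("\n".join(ws.log))
```

The point of the sketch is where the rules live. The developer cannot overwrite curriculum content, nothing is marked done without a second role signing off, and the run halts once the step budget is spent, regardless of how capable any individual agent is.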

Risk, then, isn’t an afterthought. It’s a design principle.

We don’t build airplanes by asking, “Can the wings lift?”—we design for turbulence, engine failure, and human error. Likewise, we shouldn’t build AI systems by only asking, “Can it complete the task?” We should ask: What happens when things go wrong? Where does the system bend, and where does it break? How will it recover?

To answer those questions, we need more than engineers in the room. We need to bring non-technologists to the table—people who understand system dynamics, risk management, and organizational behavior. Experts in aviation safety, healthcare delivery, emergency response, and logistics have been solving these problems for decades. Their knowledge is not just relevant—it’s essential.

And as part of that design process, we must also ask: Where and when do humans need to be in the loop? In what moments of decision-making, escalation, or oversight should human judgment step in? This isn’t a concession to the limitations of AI—it’s a recognition of the complementarity between human strengths and machine capabilities. The goal should be to design systems where humans and AI can collaborate safely, effectively, and accountably.
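One way to picture a human-in-the-loop checkpoint is as a gate in front of every action an agent proposes. The sketch below is an illustration only, written under stated assumptions: the `Action` type, the numeric `risk` score, the `0.5` threshold, and the `ask_human` callback are all hypothetical, and how risk would actually be scored is left open.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    description: str
    risk: float  # 0.0 (harmless) to 1.0 (severe); the scoring method is assumed


def run_with_oversight(
    action: Action,
    execute: Callable[[Action], None],
    ask_human: Callable[[Action], bool],
    risk_threshold: float = 0.5,
) -> str:
    """Execute low-risk actions directly; escalate the rest to a human reviewer."""
    if action.risk < risk_threshold:
        execute(action)
        return "auto"
    if ask_human(action):
        execute(action)
        return "approved"
    return "blocked"  # the action is never executed


executed = []
# Stand-in for a person: this reviewer refuses anything that publishes content.
reviewer = lambda action: "publish" not in action.description

print(run_with_oversight(Action("rename a button", 0.1), executed.append, reviewer))
print(run_with_oversight(Action("publish level 3", 0.9), executed.append, reviewer))
```

Deciding where the threshold sits, and who answers when the gate asks, is exactly the kind of question that calls for organizational judgment rather than model tuning.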

This is the work of organizational thinking, not just model tuning. It’s the difference between clever code and resilient systems. And if multi-agent AI is going to move from lab demos to real-world deployment—especially in high-stakes domains—this kind of thinking can’t be optional.

AI might power the engine, but those who understand risk, systems, and design must be in the pilot’s seat.