Using GenAI to Review Research Papers - Step 3: Crafting Targeted Questions

Welcome back to my series on using Generative AI (GenAI) to review research papers. This series documents my evolving methodology for analyzing papers with AI assistance. I want to be clear - I'm sharing my personal approach to understanding research papers better, using AI as a thinking partner.

Like many of us, I sometimes struggle with reading academic papers, and I've found that breaking down my reading process and using AI thoughtfully helps me grasp the content more thoroughly. While these techniques might eventually help with formal review work, right now I'm focused on improving my own comprehension and learning.

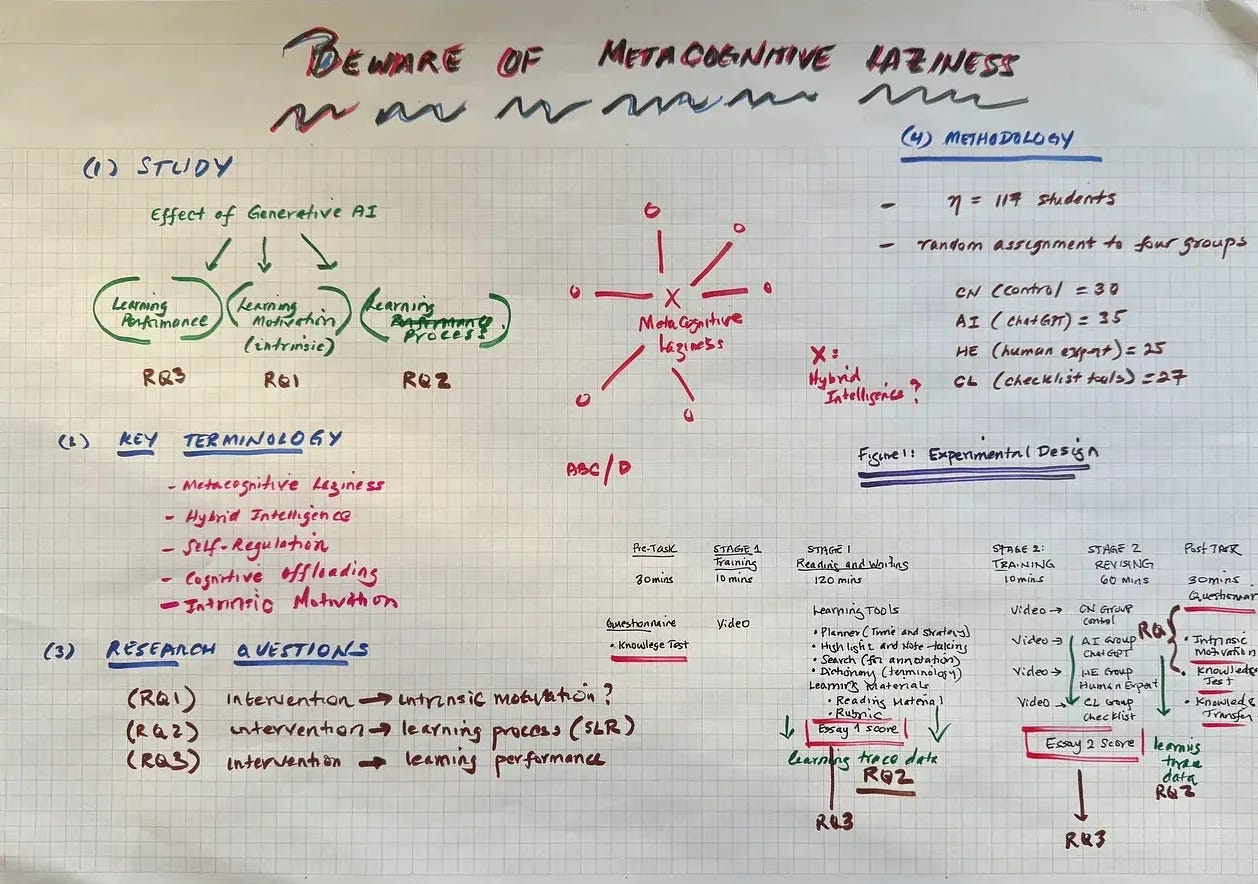

In my first post, I shared two fundamental steps in my review process. Rather than jumping straight to AI, I start with careful reading - at least two passes through the paper, while taking notes and focusing on key questions about research goals, methodology, and results. Next, following John McPhee's writing wisdom, I create a visual diagram of the paper's structure and progression.

Step 1. Read the research paper at least twice.

Step 2. Create a visual diagram of the paper’s structure and progression.

After Steps 1 and 2, I should hopefully have a good overview of the research paper. I also have some preliminary thoughts about what I understand, what I don’t understand, and what I need to investigate further.

Today, I'll be applying Step 3 - generating a set of targeted questions to pose to multiple AI models. These questions are designed to systematically examine the paper's scope, methodology, and structure.

Once again, the paper I am analyzing is "Beware of Metacognitive Laziness: Effects of Generative Artificial Intelligence on Learning Motivation, Processes, and Performance." It's a particularly fitting choice as we'll be using AI to analyze research about AI's effects on learning.

By the end of Step 3, I had arrived at the following set of targeted questions, which I will pose to each AI model.

Research Focus & Framing:

What are the three effects of Generative AI that the authors investigate?

How do the authors define each of these three areas of investigation?

What are the specific research questions that frame the experiment?

How effectively have the researchers framed their study?

Terminology & Concepts:

What key terms are used in the study and how are they defined?

Does the term "metacognitive laziness" have precedent in the research literature?

Methodology & Structure:

What methodology did the researchers employ?

What was the study's sample size and group structure?

What are the major sections of the paper?

Are there appendices?

What is Figure 1?
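Since the same questions will go to several models, it can help to keep them in one place so every model sees identical wording and numbering. Here is a minimal sketch of how that might look in Python. The names `QUESTIONS` and `build_prompt` are hypothetical, chosen only for illustration; this is not tied to any particular AI tool or API, and it only assembles text that can be pasted into a chat window.

```python
# Hypothetical sketch: the targeted questions from this post, grouped by category.
# The names QUESTIONS and build_prompt are illustrative assumptions, not a real tool.
QUESTIONS = {
    "Research Focus & Framing": [
        "What are the three effects of Generative AI that the authors investigate?",
        "How do the authors define each of these three areas of investigation?",
        "What are the specific research questions that frame the experiment?",
        "How effectively have the researchers framed their study?",
    ],
    "Terminology & Concepts": [
        "What key terms are used in the study and how are they defined?",
        'Does the term "metacognitive laziness" have precedent in the research literature?',
    ],
    "Methodology & Structure": [
        "What methodology did the researchers employ?",
        "What was the study's sample size and group structure?",
        "What are the major sections of the paper?",
        "Are there appendices?",
        "What is Figure 1?",
    ],
}


def build_prompt(paper_title, questions):
    """Return one plain-text prompt with the questions numbered across categories."""
    lines = [f'Please answer the following questions about the paper "{paper_title}".']
    number = 1
    for category, items in questions.items():
        lines.append("")              # blank line between categories
        lines.append(f"{category}:")
        for question in items:
            lines.append(f"{number}. {question}")
            number += 1
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_prompt("Beware of Metacognitive Laziness", QUESTIONS))
```

Numbering the questions continuously makes it easier to line up answers from different models side by side later on.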

Conclusion

By this point, I've already invested significant time in Steps 1, 2, and 3 - carefully reading the paper multiple times, creating a detailed structural diagram, and generating a set of guiding questions. I believe this preparation is crucial. Without a thorough understanding of the paper beforehand, I'd risk accepting AI-generated responses at face value or missing important inaccuracies. The AI models can be impressive, but as we will see, they can also confidently present incorrect information. Throughout this process, my goal is to remain firmly in the driver's seat - the AI is my thinking partner, but I'm the one steering the analysis, challenging the responses, and making final judgments about the research.

In the next post, we will begin Step 4 of the process - presenting the targeted questions to multiple AI models, including OpenAI’s ChatGPT, Anthropic’s Claude 3.5 Sonnet, Google’s NotebookLM, and some of the newest open-source models, including DeepSeek and Alibaba’s Qwen 2.5-Max.